Xiao Qin's Research

Final Report

BUD: A Buffer-Disk Architecture for Energy Conservation in Parallel Disk Systems

Findings

An Energy-Efficient Framework for Large-Scale Parallel Storage Systems

A Simulation Framework for Energy-efficient Data Grids

An Energy-Efficient Scheduling Algorithm Using Dynamic Voltage Scaling for Parallel Applications on Clusters

Load-Balancing Strategies for Energy-Efficient Parallel Storage Systems with Buffer Disks

Sacrificing Reliability for Energy Saving: Is It Worthwhile for Disk Arrays

Energy-Efficient Prefetching for Parallel I/O Systems with Buffer Disks

Improving Reliability and Energy Efficiency of Disk Systems via Utilization Control

Energy Conservation for Real-Time Disk Systems with I/O Burstiness

An Adaptive Energy-Conserving Strategy for Parallel Disk Systems

DARAW: A New Write Buffer to Improve Parallel I/O Energy-Efficiency

MICRO: A Multi-level Caching-based Reconstruction Optimization for Mobile Storage Systems

PEARL: Performance, Energy, and Reliability Balanced Dynamic Data Redistribution for Next Generation Disk Arrays

HyBUD: An Energy-Efficient Architecture for Hybrid Parallel Disk Systems

Energy-Aware Prefetching for Parallel Disk Systems

DORA: A Dynamic File Assignment Strategy with Replication

Collaboration-Oriented Data Recovery for Mobile Disk Arrays

A File Assignment Strategy Independent of Workload Characteristic Assumptions

References

[Roth et al., 2008] Energy conservation has become a critical problem for real-time embedded storage systems. Although a variety of approaches to reducing energy consumption have been studied extensively, energy conservation for these systems remains an open problem. In this research, we propose an energy management strategy (IBEC) that exploits I/O burstiness in real-time embedded storage systems. Our approach blends the IBEC energy management strategy with a low-level disk scheduling mechanism to reduce the energy consumed by storage systems.

The left Figure shows the power consumption of the four algorithms as the average request deadline varies from 75 ms to 25000 ms. Each of the four algorithms consumes the same, maximal amount of power when the average request deadline is less than 3500 ms. This is because the hard disk has to be kept active all the time to service arriving disk requests, which have very tight deadlines. In other words, IBEC has no opportunity to conserve power, so it gracefully degrades to existing power-aware scheduling algorithms such as DP-EDF and PA-EDF. When the average request deadline is equal to or larger than 3500 ms, however, IBEC starts to conserve energy while the power consumption of the three baseline algorithms remains unchanged. We attribute the PF improvement of IBEC over the three baseline algorithms to the fact that IBEC judiciously exploits the loose deadlines to conserve energy. More interestingly, the improvement of IBEC over the three existing schemes in terms of PF becomes more pronounced as the deadline becomes looser, because IBEC can further reduce its power consumption when more slack time is available. On average, IBEC saves 10.8% power compared with the baseline algorithms.

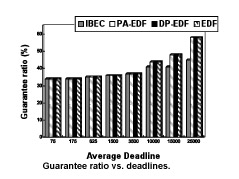

The left Figure plots the guarantee ratio (GR) of the four algorithms as the deadline is increased from 75 ms to 25000 ms. IBEC performs exactly the same as the other approaches with respect to GR when the deadline is less than 1000 ms. The reason is that the relatively high workload, together with the tight deadlines, makes IBEC concentrate solely on guaranteeing the timing constraints of arriving requests, which have a higher priority than power conservation. However, the GR of IBEC drops off suddenly when the deadline is 10,000 ms. This is an artifact of our specific implementation of the IBEC algorithm. To keep simulation times manageable, and to closely approximate a real system in which there is no unlimited time to re-evaluate the schedulability of a queue, we limited the maximum number of requests whose deadline constraints IBEC would ensure. In particular, when the waiting queue holds more than 1,000 requests, our implementation of IBEC no longer guarantees the schedulability of requests after the 1,000th.
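The effect of such a cap can be illustrated with a minimal sketch. It is not the authors' implementation: the function name, the `(deadline, service time)` request representation, and the choice to apply the cap after sorting by deadline are all assumptions made for illustration.

```python
from typing import List, Tuple

MAX_CHECKED = 1000  # cap from the text; requests beyond it are not verified


def checked_prefix_is_schedulable(
    queue: List[Tuple[float, float]],  # (absolute deadline in ms, service time in ms)
    start_ms: float,
    max_checked: int = MAX_CHECKED,
) -> bool:
    """Check deadlines only for the first `max_checked` requests in EDF order.

    Assumption: requests are served back-to-back, earliest deadline first,
    beginning at `start_ms`. Requests past the cap are simply not examined.
    """
    finish = start_ms
    for deadline, service in sorted(queue)[:max_checked]:
        finish += service
        if finish > deadline:
            return False
    return True
```

With a small cap the check can report success even though a later request would miss its deadline, which is the kind of behavior that could explain a sudden drop in GR once the queue grows past the cap.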

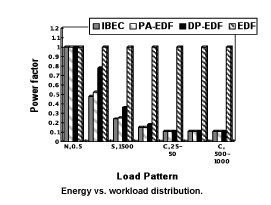

From the following Figure, we can make three important observations. First, all algorithms perform identically in power consumption under the Normal Distribution. Second, the three power-aware algorithms noticeably outperform the EDF scheme, which has no power awareness at all, under the Sparse Distribution. This is because the Sparse Distribution produces a relatively large time interval between two consecutive disk requests, which in turn gives the three power-aware algorithms the chance to switch the hard disk to “sleep” mode to save energy. Furthermore, IBEC slightly outperforms DP-EDF and PA-EDF, two naïve power-aware algorithms, because IBEC makes the most of the slack time of each arriving request. Put another way, IBEC wakes up the disk only at the last moment from which the deadlines of all arrived requests can still be met, while DP-EDF lets the disk sleep for a fixed period of time regardless of whether a request is waiting for service. Third, for clustered workloads, IBEC and the two naïve power-aware techniques perform comparably, and all three perform significantly better than EDF. This is because IBEC, DP-EDF, and PA-EDF can put the disk into the “sleep” state for the entire interval between clusters of requests, and so save substantial power compared with EDF. IBEC ties with DP-EDF and PA-EDF because its improvement in power consumption depends essentially on the slack times of arriving requests rather than on their arrival patterns.
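The "last moment" idea can be sketched as follows. This is an illustrative calculation rather than the IBEC code: it assumes that all queued requests have already arrived, that they are served back-to-back in EDF order, and that the disk needs a fixed spin-up time (the hypothetical `spinup_ms` parameter) before it can serve anything.

```python
from typing import List, Tuple


def latest_wakeup_ms(
    requests: List[Tuple[float, float]],  # (absolute deadline in ms, service time in ms)
    spinup_ms: float,
) -> float:
    """Latest time the disk can start spinning up with every deadline still met.

    If service starts at time t, the i-th request in EDF order finishes at
    t + (sum of service times up to i), so t must not exceed
    deadline_i - cumulative_service_i for any i.
    Returns +inf for an empty queue (no reason to wake up yet).
    """
    cumulative = 0.0
    latest_start = float("inf")
    for deadline, service in sorted(requests):
        cumulative += service
        latest_start = min(latest_start, deadline - cumulative)
    return latest_start - spinup_ms


# Two pending requests, 100 ms of service each, 200 ms spin-up (made-up values).
wake = latest_wakeup_ms([(1000.0, 100.0), (1050.0, 100.0)], spinup_ms=200.0)
```

A fixed-period policy would instead wake the disk at a preset time independent of the queue, so it either wakes too early (wasting sleep time) or too late (missing deadlines) whenever the slack differs from that preset.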

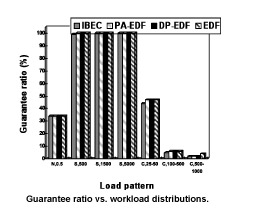

The results reported in the left Figure reveal that all four algorithms deliver a 100% guarantee ratio under the Sparse Distribution. The reason is that the average request deadline is generally much shorter than the sparse idle threshold, which means that even though IBEC aggressively slows down the processing pace of disk requests, their deadlines can still be satisfied. Under the Cluster Distribution, the performance of the four algorithms degrades as the parameters of the distribution increase. This is because a large number of requests arrive during each burst. Consequently, the algorithms can guarantee the deadlines of only a small fraction of them.

[Liu et al., 2008a] Reducing energy consumption has become a pressing issue in cluster computing systems, not only to minimize electricity cost but also to improve system reliability. It is therefore highly desirable to design energy-efficient scheduling algorithms for applications running on clusters. In this project, we address the problem of non-preemptively scheduling mixed tasks on power-aware clusters. We developed an algorithm called Power Aware Slack Scheduler (PASS) for tasks with different priorities and deadlines. PASS attempts to minimize energy consumption in addition to maximizing the number of tasks completed before their deadlines. To achieve this goal, high-priority tasks are scheduled first so that they meet their deadlines. PASS then looks for slack periods into which low-priority tasks can be inserted so that their deadlines can also be guaranteed. The dynamic voltage scaling (DVS) technique is used to reduce energy consumption by exploiting the available slack and adjusting voltage levels accordingly.
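The two-phase idea described above (place high-priority tasks first, then fit low-priority tasks into the remaining slack) might be sketched as below. This is a simplified single-processor illustration, not the PASS algorithm itself: the task tuples, the function names, the latest-start placement of hard tasks, and the first-fit gap filling are all assumptions.

```python
from typing import List, Tuple

Task = Tuple[str, float, float]  # (name, execution time, absolute deadline)
Slot = Tuple[str, float, float]  # (name, start, finish)


def place_hard_latest(hard: List[Task]) -> List[Slot]:
    """Place hard tasks as late as possible, walking back from the last deadline."""
    slots: List[Slot] = []
    next_start = float("inf")
    for name, exec_time, deadline in sorted(hard, key=lambda t: t[2], reverse=True):
        finish = min(deadline, next_start)  # must end before the next hard task starts
        start = finish - exec_time
        if start < 0:
            raise ValueError(f"hard task {name} cannot meet its deadline")
        slots.append((name, start, finish))
        next_start = start
    slots.reverse()  # return slots in increasing start order
    return slots


def fill_slack(hard_slots: List[Slot], soft: List[Task]) -> Tuple[List[Slot], List[str]]:
    """First-fit soft tasks (earliest deadline first) into gaps around hard slots."""
    placed: List[Slot] = []
    pending = sorted(soft, key=lambda t: t[2])
    cursor = 0.0
    # A sentinel slot at infinity lets soft tasks run after the last hard task.
    for _, hard_start, hard_finish in hard_slots + [("", float("inf"), float("inf"))]:
        still_pending: List[Task] = []
        for name, exec_time, deadline in pending:
            finish = cursor + exec_time
            if finish <= hard_start and finish <= deadline:
                placed.append((name, cursor, finish))
                cursor = finish
            else:
                still_pending.append((name, exec_time, deadline))
        pending = still_pending
        cursor = hard_finish
    return placed, [name for name, _, _ in pending]


hard_slots = place_hard_latest([("H1", 2, 10), ("H2", 3, 12)])
soft_slots, rejected = fill_slack(hard_slots, [("S1", 4, 8), ("S2", 5, 20)])
```

In this made-up example the hard tasks occupy the interval from 7 to 12, leaving one contiguous gap from 0 to 7 in which `S1` fits, while `S2` runs after the hard tasks finish.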

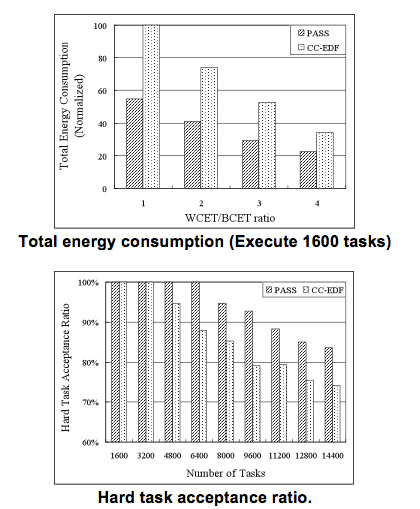

The following Figure shows the total energy consumed to execute 1600 tasks. We observe that PASS saves up to 60 percent of the energy consumed by CC-EDF. PASS achieves such significant energy savings because it creates large integrated slacks by scheduling tasks at their latest possible start times. CC-EDF schedules tasks according to the Minimum Completion Time (MCT) rule, which always schedules a task at its earliest possible start time. As a result, CC-EDF leaves hardly any slack time that the DVS technique could use.
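Why slack translates into energy savings under DVS can be seen from a back-of-the-envelope model. The sketch below uses the common textbook approximation that dynamic energy for a fixed amount of work scales with the square of the supply voltage and that frequency scales roughly linearly with voltage. It is an assumption for illustration, not the energy model used in the reported simulations, and it ignores static power and discrete voltage levels.

```python
def scaled_energy(exec_ms_at_fmax: float, slack_ms: float, energy_at_fmax: float = 1.0) -> float:
    """Energy of one task when it is stretched to fill its slack.

    Assumed model: running at speed ratio r = f / f_max takes 1/r times longer
    and costs r**2 times the full-speed energy for the same work.
    """
    if exec_ms_at_fmax <= 0 or slack_ms < 0:
        raise ValueError("execution time must be positive and slack non-negative")
    speed_ratio = exec_ms_at_fmax / (exec_ms_at_fmax + slack_ms)
    return energy_at_fmax * speed_ratio ** 2


no_slack = scaled_energy(10.0, 0.0)     # runs at full speed
equal_slack = scaled_energy(10.0, 10.0)  # runs at half speed
```

Under this model a task with no slack cannot be slowed down at all, which is consistent with the observation that a scheduler leaving little slack gives DVS little to work with.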

We now compare the performance of PASS against CC-EDF. We performed simulations for different task loads in order to examine the performance consistency. With respect to Hard Task Acceptance Ratio, we observe from the above Figure that PASS yields 10% better performance on average than CC-EDF. When the number of tasks is below 6400, PASS guarantees that all hard tasks meet their deadlines. As the number of tasks increases further, PASS is still able to schedule most of the hard tasks, while CC-EDF can no longer reach a similar performance level. The reason is that PASS always schedules hard tasks first, which helps meet their deadlines. CC-EDF does not consider task priority, which results in a number of unschedulable hard tasks.

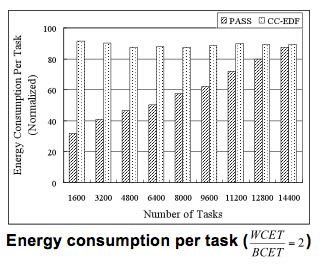

The Figure below shows that neither algorithm can schedule all tasks when the number of tasks exceeds 3200. Comparing the two algorithms on the Total Energy Consumption metric would then be unfair, since the number of tasks accepted by PASS differs from the number accepted by CC-EDF. Instead, we use the Energy Consumption per Task as the metric. In this way, we are able to study the effect of the number of tasks on energy consumption.
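A small numeric sketch shows why the normalized metric is the fairer one. The figures below are made up for illustration and are not taken from the reported experiments.

```python
def energy_per_task(total_energy: float, accepted_tasks: int) -> float:
    """Total energy divided by the number of tasks actually accepted."""
    if accepted_tasks <= 0:
        raise ValueError("at least one task must be accepted")
    return total_energy / accepted_tasks


# Hypothetical schedulers: A accepts more tasks and therefore uses more energy in total.
a_total, a_accepted = 900.0, 3000
b_total, b_accepted = 800.0, 2000

a_per_task = energy_per_task(a_total, a_accepted)
b_per_task = energy_per_task(b_total, b_accepted)
```

Here scheduler A looks worse on total energy but better per accepted task, because B's lower total partly reflects the work it rejected.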

As shown in the following Figure, PASS consistently performs better than CC-EDF with respect to Energy Consumption per Task. This is again because PASS decreases the processor speed for each task by utilizing the corresponding slack time. An interesting observation is that as the number of tasks increases, the Energy Consumption per Task achieved by PASS also increases. With more incoming tasks, less slack time is available, since more tasks need to be scheduled within the same slack.