Spring 2022

A Comparison of CTIndex Devices

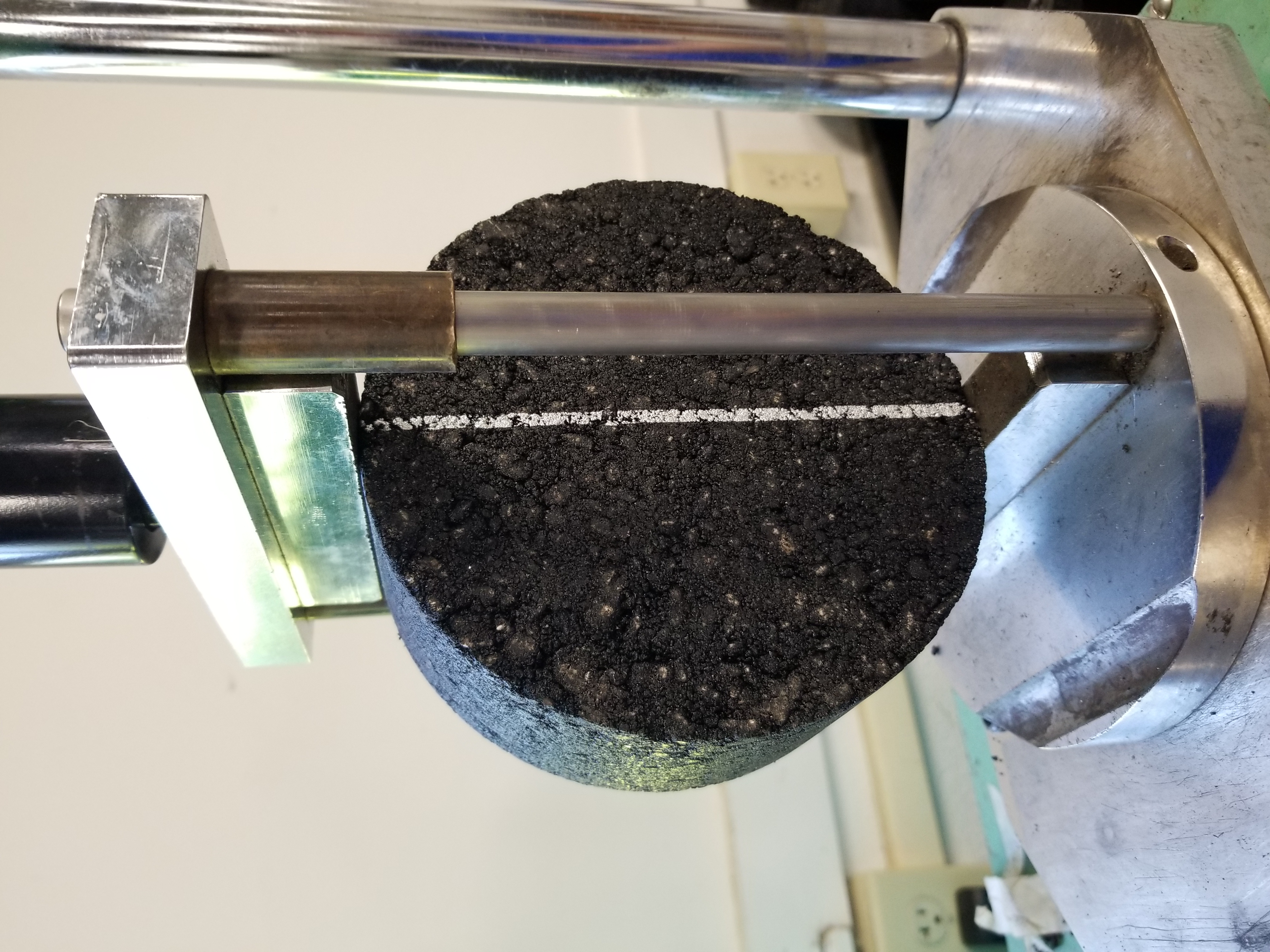

Imagine a scenario where a contractor works on a balanced mix design and finally gets that mix to pass an agency’s IDEAL-CT criteria. Then, after the contractor submits specimens to the agency for approval, the agency’s results fail to meet the mix design criteria. What happened? When the contractor and agency discuss the results, they realize that the two labs tested their specimens on machines from different manufacturers.

Could the use of different devices explain the discrepancy between the two labs? How can we ensure that different machines provide equivalent results? A recent NCAT study, which evaluated six different devices to assess how much they could affect the overall variability of IDEAL-CT results, may help resolve this situation.

Variability in IDEAL-CT test results can come from many sources, including the operator, materials, specimen preparation, and equipment differences. Specimen preparation is known to have a large effect on IDEAL-CT results. For this study, specimen fabrication was carefully controlled: a single technician made all of the specimens using the same specimen preparation equipment and oven heating times, and the specimens were randomly assigned among the six devices.
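The article does not describe how the specimens were randomized among the devices. As one illustration, the sketch below shuffles one mixture's specimens with a seeded generator and splits them evenly, eight per device (the replicate count used in the study). The device labels (`D1` to `D6`), the specimen ID format, and the function name `assign_specimens` are hypothetical.

```python
import random

# Hypothetical labels for the six devices; the article does not name them.
DEVICES = ["D1", "D2", "D3", "D4", "D5", "D6"]
REPLICATES_PER_DEVICE = 8  # eight replicates per mixture per device, as in the study


def assign_specimens(mix_id: str, seed: int = 0) -> dict[str, list[str]]:
    """Randomly split one mixture's specimens evenly among the devices.

    Specimen IDs such as "Mix1-07" are a hypothetical labeling scheme.
    """
    n_specimens = len(DEVICES) * REPLICATES_PER_DEVICE
    specimens = [f"{mix_id}-{i:02d}" for i in range(1, n_specimens + 1)]
    rng = random.Random(seed)  # seeded so the assignment can be recorded and reproduced
    rng.shuffle(specimens)
    # Consecutive slices of the shuffled list go to each device in turn.
    return {
        device: specimens[k * REPLICATES_PER_DEVICE:(k + 1) * REPLICATES_PER_DEVICE]
        for k, device in enumerate(DEVICES)
    }


if __name__ == "__main__":
    for device, ids in assign_specimens("Mix1", seed=2022).items():
        print(device, ids)
```

Randomizing in this way helps keep any drift in fabrication order (for example, early versus late specimens) from lining up with a particular device.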

When investigating differences in test results due to devices, the analysis should include data from a variety of mixtures whose results range from low to high. In this study, the same technician tested eight replicates of each of seven different mixtures on each device. Due to natural variability, results for each mixture will differ from device to device. Although replicates of a mix may be repeatable within a given device, there may still be differences when results are compared between devices. The concern is when one device consistently yields results that are higher or lower than another. So how much of a difference can be tolerated?

Statistical equivalence is not a term often used in materials testing. The idea is that results are considered equivalent when the differences between them are practically irrelevant. For example, if Sample A has an average CTIndex of 95 and Sample B has an average CTIndex of 93, is this two-unit difference large enough to matter given the test’s variability? To establish a limit for an acceptable difference, the analysis used the average within-lab variability, expressed as a coefficient of variation (COV), from the 2018 NCAT Round Robin Study, which was 18%. Therefore, when the mean results from two devices consistently differ by no more than 18%, the devices should be considered equivalent.
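The arithmetic behind the Sample A versus Sample B example can be sketched as follows. This is only a point-estimate screen, and it assumes the difference is expressed as a percentage of a reference device's mean; the article does not state which basis the study used. A formal equivalence decision also has to account for the scatter among replicates. The names `cov_pct`, `percent_difference`, and `within_limit` are illustrative, not part of any NCAT tool.

```python
import statistics

# Equivalence limit from the article: the average within-lab COV (18%)
# reported in the 2018 NCAT Round Robin Study.
EQUIVALENCE_LIMIT_PCT = 18.0


def cov_pct(values: list[float]) -> float:
    """Coefficient of variation (sample standard deviation / mean), in percent."""
    return statistics.stdev(values) / statistics.mean(values) * 100.0


def percent_difference(mean_test: float, mean_ref: float) -> float:
    """Absolute difference between two means as a percentage of the reference mean.

    Assumption: the reference (e.g., agency) device's mean is the basis.
    """
    return abs(mean_test - mean_ref) / mean_ref * 100.0


def within_limit(mean_test: float, mean_ref: float,
                 limit_pct: float = EQUIVALENCE_LIMIT_PCT) -> bool:
    """Point-estimate screen: is the difference no larger than the limit?"""
    return percent_difference(mean_test, mean_ref) <= limit_pct


# Sample A (CTIndex 95) vs. Sample B (CTIndex 93), from the example above.
print(round(percent_difference(95.0, 93.0), 2))  # 2.15
print(within_limit(95.0, 93.0))                   # True
```

Under this assumption, the two-unit gap is about 2% of the reference mean, far inside the 18% limit.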

Two key findings came from this study. First, some devices had average loading rates faster than the 50 ± 2 mm/min currently specified in ASTM D8225. All of the measured rates from over 300 IDEAL-CT tests fell between 49 and 53 mm/min. Thus, although not all of the rates fell within the specified range of 48 to 52 mm/min, the spread of measured rates was no wider than the 4 mm/min tolerance window the specification allows. Because the devices operated at similar rates, there was no discernible effect of loading rate on the final CTIndex results for each mix.

Second, using the Two One-Sided Tests (TOST) procedure for equivalence, all but one of the devices were found to provide equivalent results. When that device’s manufacturer was made aware of the issue, a flaw in its data collection system was found and corrected. Although differences of up to 5 CTIndex units remained in the final comparisons, these differences were not large enough to be considered relevant given the variability of the test. Thus, the study indicates that different devices can be trusted to yield equivalent results.
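A minimal sketch of a TOST comparison between two devices is shown below. It assumes a pooled-variance t statistic, an equivalence margin of ±18% of the reference device's mean, and α = 0.05; the article does not say which TOST variant or margin basis the study used, so all three are assumptions. To stay within the Python standard library, the Student's t CDF is computed from the regularized incomplete beta function using the continued-fraction method described in Numerical Recipes. The replicate values in the usage example are hypothetical, not study data.

```python
import math
import statistics


def _betacf(a: float, b: float, x: float, max_iter: int = 200, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_bt = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
              + a * math.log(x) + b * math.log(1.0 - x))
    bt = math.exp(log_bt)
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def t_cdf(t: float, df: float) -> float:
    """CDF of Student's t distribution with df degrees of freedom."""
    x = df / (df + t * t)
    upper_tail = 0.5 * _betai(df / 2.0, 0.5, x)  # P(T > |t|)
    return 1.0 - upper_tail if t > 0 else upper_tail


def tost(test: list[float], ref: list[float],
         margin_pct: float = 18.0, alpha: float = 0.05) -> tuple[float, float, bool]:
    """Two One-Sided Tests for equivalence of two device means.

    Returns (mean difference, TOST p-value, equivalent?).
    Assumptions: pooled variance; margin = margin_pct percent of the ref mean.
    """
    n1, n2 = len(test), len(ref)
    diff = statistics.mean(test) - statistics.mean(ref)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * statistics.variance(test)
                  + (n2 - 1) * statistics.variance(ref)) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    if se == 0.0:
        raise ValueError("replicates show no variability; t statistic undefined")
    margin = margin_pct / 100.0 * statistics.mean(ref)
    # Lower test, H0: diff <= -margin. Small p means diff is clearly above -margin.
    p_lower = 1.0 - t_cdf((diff + margin) / se, df)
    # Upper test, H0: diff >= +margin. Small p means diff is clearly below +margin.
    p_upper = t_cdf((diff - margin) / se, df)
    # Equivalence is concluded only if BOTH one-sided nulls are rejected.
    p_value = max(p_lower, p_upper)
    return diff, p_value, p_value < alpha


# Hypothetical CTIndex replicates (eight per device) for a single mixture.
device_a = [92, 101, 88, 97, 95, 104, 90, 99]
device_b = [90, 98, 86, 95, 93, 100, 89, 97]
diff, p, equivalent = tost(device_a, device_b)
print(f"difference = {diff:.2f}, p = {p:.2g}, equivalent = {equivalent}")
```

Taking the larger of the two one-sided p-values is what makes TOST conservative: a device pair passes only when the data rule out a meaningful difference in either direction.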

These findings do not mean that differences won’t occur, and it’s important to investigate large differences between comparison testing results. Following specimen preparation and testing best practices will greatly reduce the chance of a wide spread in results among specimens from the same mix sample. When specimens are to be tested on two different devices, it is highly recommended that they be prepared at the same time and under the same conditions to reduce variability between the split samples.

For more information about this article, please contact Nathan Moore.