Project Description

l-RIM: Learning based Resilient Immersive Media-Compression, Delivery, and Interaction

Augmented Reality/Virtual Reality (AR/VR) has been recognized as a transformative service for Next Generation (NextG) network systems, and wireless-supported AR/VR will offer users great flexibility and an enhanced immersive experience while unleashing a plethora of new applications. The primary goal of this project is to explore innovative technologies in NextG and Artificial Intelligence (AI) to provide a unified media compression, communication, and computing framework that enables resilient wireless AR/VR.

May 1, 2022 ~ Apr. 30, 2026

Project Team

-

Anthony Chen

-

Chris Henry

-

Biren Kathariya (graduated 2024)

-

Yangfan Sun (graduated 2023)

-

Ticao Zhang (graduated 2022)

Related Publications (journal & magazine)

-

J. Wang, H. Du, D. Niyato, Z. Xiong, J. Kang, S. Mao, and X. (S.) Shen, “Guiding AI-generated digital content with wireless perception,” IEEE Wireless Communications, to appear.

-

M. Xu, H. Du, D. Niyato, J. Kang, Z. Xiong, S. Mao, Z. Han, A. Jamalipour, D.-I. Kim, X. (S.) Shen, V.C.M. Leung, and H.V. Poor, “Unleashing the power of edge-cloud generative AI in mobile networks: A survey of AIGC services,” IEEE Communications Surveys and Tutorials, to appear.

-

B. Kathariya, Z. Li, and G. van der Auwera, “Joint pixel and frequency feature learning and fusion via channel-wise transformer for high-efficiency learned in-loop filter in VVC,” IEEE Transactions on Circuits and Systems for Video Technology, to appear.

-

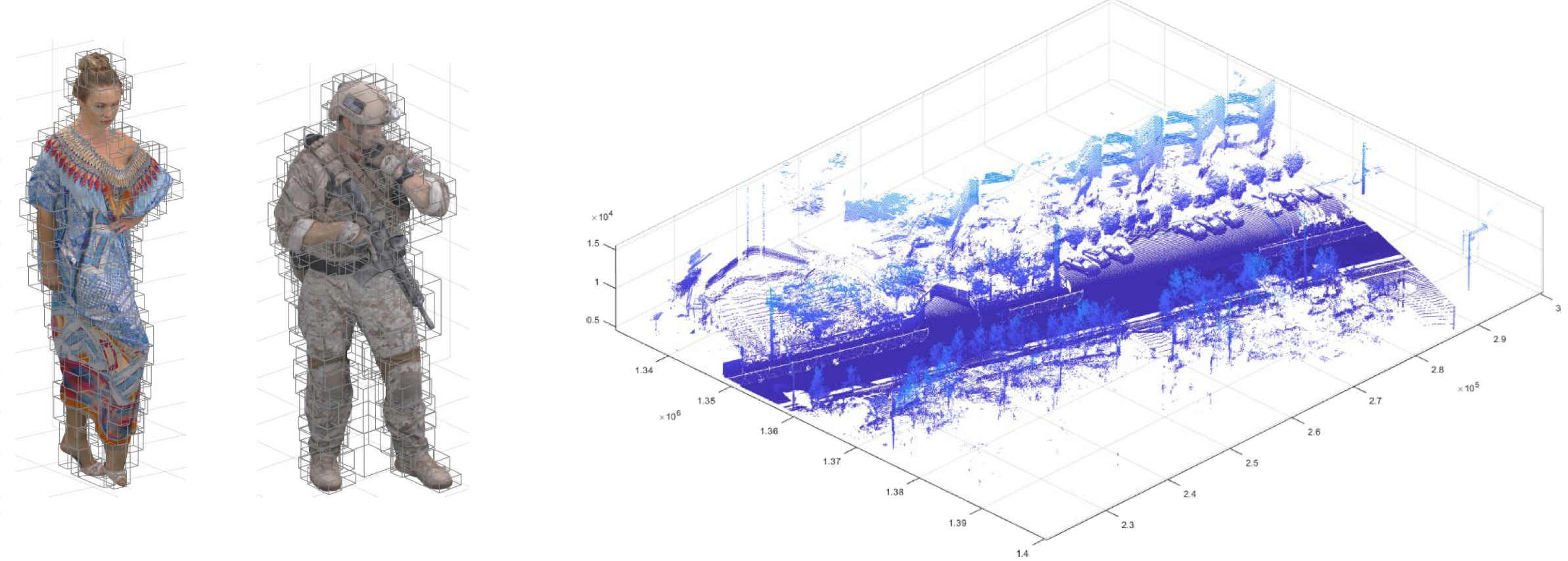

A. Akhtar, Z. Li, and G. van der Auwera, “Inter-frame compression for dynamic point cloud geometry coding,” IEEE Transactions on Image Processing, vol.33, no.1, pp.584-594, Jan. 2024.

-

M. Xu, D. Niyato, H. Zhang, J. Kang, Z. Xiong, S. Mao, and Z. Han, “Sparks of generative pretrained transformers in edge intelligence for the Metaverse: Caching and inference for mobile artificial intelligence-generated content services,” IEEE Vehicular Technology Magazine, Special Issue on Metaverse for Connected and Automated Vehicles and Intelligent Transportation Systems, vol.18, no.4, pp.35-44, Dec. 2023.

-

M. Xu, D. Niyato, B. Wright, H. Zhang, J. Kang, Z. Xiong, S. Mao, and Z. Han, “EPViSA: Efficient auction design for real-time physical-virtual synchronization in the metaverse,” IEEE Journal on Selected Areas in Communications, Special Issue on Human-Centric Communication and Networking for Metaverse over 5G and Beyond Networks, vol.42, no.3, pp.694-709, Mar. 2024.

-

M. Xu, D. Niyato, J. Chen, H. Zhang, J. Kang, Z. Xiong, S. Mao, and Z. Han, “Generative AI-empowered simulation for autonomous driving in vehicular mixed reality metaverses,” IEEE Journal of Selected Topics in Signal Processing, Special Issue on Signal Processing for XR Communications and Systems, vol.17, no.5, pp.1064-1079, Sept. 2023.

-

Q. Luo, H. Zhang, B. Di, M. Xu, A. Chen, S. Mao, D. Niyato, and Z. Han, “An overview of 3GPP standardization for extended reality (XR) in 5G and beyond,” ACM GetMobile, vol.27, no.3, pp.10-17, Sept. 2023.

-

Y. Sun, L. Li, Z. Li, S. Wang, S. Liu, and G. Li, “Learning a compact spatial-angular representation for light field,” IEEE Transactions on Multimedia, vol.25, pp.7262-7273, Nov. 2022.

-

Y. Sun, Z. Li, S. Wang, and W. Gao, “Learning-based depth-guided light field factorization for compressive light field display,” Optics Express, vol.31, no.4, pp.5399-5413, Feb. 2023.

-

A. Chen, S. Mao, Z. Li, M. Xu, H. Zhang, D. Niyato, and Z. Han, “An introduction to point cloud compression standards,” ACM GetMobile, vol.27, no.1, pp.11-17, Mar. 2023.

-

H. Zhang, S. Mao, D. Niyato, and Z. Han, “Location-dependent augmented reality services in wireless edge-enabled metaverse systems,” IEEE Open Journal of the Communications Society, vol.4, pp.171-183, Jan. 2023. DOI: 10.1109/OJCOMS.2023.3234254.

-

Y. Sun, J. Chen, Z. Wang, M. Peng, and S. Mao, “Enabling mobile virtual reality with Open 5G, fog computing and reinforcement learning,” IEEE Network, vol.36, no.6, pp.142-149, Nov./Dec. 2022. DOI: 10.1109/MNET.010.2100481.

-

J. Dai, G. Yue, S. Mao, and D. Liu, “Sidelink-aided multi-quality tiled 360° virtual reality video multicast,” IEEE Internet of Things Journal, vol.9, no.6, pp.4584-4597, Mar. 2022. DOI: 10.1109/JIOT.2021.3105100.

Related Publications (conference)

-

A. Chen, S. Mao, Z. Li, M. Xu, H. Zhang, D. Niyato, and Z. Han, “Multiple description coding for point cloud,” in Proc. IEEE ICC 2024, Denver, CO, June 2024.

-

F. Quazi, A. Vazquez-Castro, D. Niyato, S. Mao, and Z. Han, “Building delay-tolerant digital twins for cislunar operations using age of synchronization,” in Proc. IEEE ICC 2024 Workshop on Cooperative Communications and Computations in Space-Air-Ground-Sea Integrated Networks (CCCSAGSIN), Denver, CO, June 2024.

-

R. Liao, Z. Li, S. Bhattacharyya, and G. York, “ViewDiffGait: View pyramid diffusion for gait recognition,” in Proc. IEEE International Conference on Automatic Face and Gesture Recognition (FG'24), Istanbul, Turkey, May 2024.

-

M. Xu, D. Niyato, H. Zhang, J. Kang, Z. Xiong, S. Mao, and Z. Han, “Joint foundation model caching and inference of generative AI services for edge intelligence,” in Proc. IEEE GLOBECOM 2023, Kuala Lumpur, Malaysia, Dec. 2023, pp.3548-3553.

-

P. Maharjan, L.T. Vanfossan, Z. Li, and J. Shen, “Fast LoG SIFT keypoint detector,” in Proc. IEEE International Workshop on Multimedia Signal Processing (MMSP'23), Poitiers, France, Sept. 2023.

-

P. Chen, B. Chen, M. Wang, S. Wang, and Z. Li, “Visual Data Compression for Metaverse: Technology, Standard, and Challenges,” in Proc. IEEE MetaCom 2023, Kyoto, Japan, June 2023, pp.360-364.

-

C. Henry, M. S. Asif, and Z. Li, “Privacy preserving face recognition with lensless camera,” in Proc. IEEE Int'l Conf. on Acoustics, Speech and Signal Processing (ICASSP'23), Rhodes Island, Greece, June 2023, pp.1-5.

-

C. Henry, M. S. Asif, and Z. Li, “Light-weight Fisher vector transfer learning for video deduplication,” in Proc. IEEE Int'l Conf. on Acoustics, Speech and Signal Processing (ICASSP'23), Rhodes Island, Greece, June 2023, pp.1-5.

-

R. Puttagunta, Z. Li, S. Bhattacharyya, and G. York, “Appearance label balanced triplet loss for multi-modal aerial view object classification,” in Proc. IEEE CVPR Workshop on Perception Beyond the Visible Spectrum (PBVS'23), Vancouver, Canada, June 2023.

-

M. Xu, D. Niyato, H. Zhang, J. Kang, Z. Xiong, S. Mao, and Z. Han, “Generative AI-empowered effective physical-virtual synchronization in the vehicular metaverse,” in Proc. International Conference on Metaverse Computing, Networking and Applications (MetaCom 2023), Kyoto, Japan, June 2023, pp.607-611.

-

Z. Liu, F. Li, Z. Li, and B. Luo, “LoneNeuron: a highly-effective feature-domain neural trojan using invisible and polymorphic watermarks,” in Proc. ACM Conference on Computer and Communications Security (CCS'22), Los Angeles, CA, Nov. 2022, pp.2129-2143.

-

B. Kathariya, Z. Li, H. Wang, and G. van der Auwera, “Multi-stage locally and long-range correlated feature fusion for learned in-loop filter in VVC,” in Proc. IEEE International Conference on Visual Communications and Image Processing (VCIP'22), Suzhou, China, Dec. 2022, pp.1-5.

-

B. Kathariya, Z. Li, H. Wang, and M. Coban, “Multi-stage spatial and frequency feature fusion using transformer in CNN-based in-loop filter for VVC,” in Proc. IEEE Picture Coding Symposium (PCS'22), San Jose, CA, Dec. 2022, pp.373-377.

Related Publications (others)

-

B. Kathariya, “Multi-scale learning and channel-wise transformer in dense prediction,” PhD Dissertation, University of Missouri, Kansas City, 2024.

-

Y. Sun, “Deep learning-based optimization of light field processing,” PhD Dissertation, University of Missouri, Kansas City, 2023.

-

H. Zhang, Z. Han, D. Niyato, and S. Mao, “Wireless technologies for metaverse,” Chapter 4 in Metaverse Communication and Computing Networks: Applications, Technologies, and Approaches, D.T. Hoang, D.N. Nguyen, C.T. Nguyen, E. Hossain, and D. Niyato (editors), Hoboken, NJ: John Wiley & Sons, 2023.

-

Dr. Zhu Li's AI-based dynamic point cloud geometry compression technique, developed in collaboration with Qualcomm's video team, has been adopted in the MPEG AI-based point cloud compression (PCC) test model.

We acknowledge the generous support of our sponsors.

This material is based upon work supported by the National Science Foundation under grant CNS-2148382, and is supported in part by funds from OUSD R&E, NIST, and industry partners as specified in the Resilient & Intelligent NextG Systems (RINGS) program. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the foundation.